17.01.23

Adaptive Interactive Movies – final blog

The overarching aim of this research project was to explore how a computer vision system could infer an audience's ongoing engagement from their real-time reactions to cinematic narrative content, and use that to steer the narrative. To do this, we studied viewers' personalities, their viewing habits, and how much they emoted while watching film content, and we created an interactive film for audiences to watch and interact with.

Cinema in its most basic form steers the viewers' attention and emotion. By that measure, a well-made film makes its audience feel the same emotions at the same time. Other types of cinema rely more on individual interpretation and so do not depend on group affect. Ramchurn designed Before We Disappear around morally ambiguous themes to encourage a variety of responses. He wanted to make a film that explored the anger that exists around climate injustice, and the idea of holding to account the corporations and systems that have caused the crisis.

Ramchurn has created several films that have been used in research. In The Disadvantages of Time Travel, every frame of the film was adapted to the viewer; in his second film, The MOMENT, each cut was based on the viewer's attention. In Before We Disappear, the scenes are reconfigurable: the film has three possible endings and around fifteen narrative constructs.

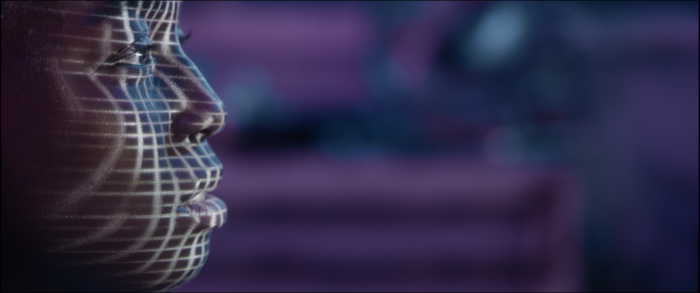

The interactive concept was to create a film that adapts to its audience. Unlike traditional interactive films such as Bandersnatch, the audience does not actively choose what will happen. Instead, using a camera and computer vision, the film adapts its narrative according to how the system perceives the audience to be feeling.

We have partnered with BlueSkeye, a Nottingham University spin-out company that develops machine learning and computer vision applications to assess mood, among other physiological data. We are adapting their technology, which reads how positive or negative an audience's emotion is (Valence) and how strong it is (Arousal), to adapt the film in real time. We are developing various algorithms that will change the narrative based on how the system interprets the viewer's changing emotional state.

To make sense of the Arousal and Valence data which would be read from people’s faces, we asked several questions. What do filmmakers intend for the audience to feel? What can the computer system observe? And how could that data be used to make a binary choice as to which scene will play next?
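To make the last of those questions concrete, here is a minimal Python sketch of one way such a binary choice could work. It is an illustration only, not the project's algorithm: the function name `choose_next_scene`, the assumption that Valence and Arousal both lie in the range -1 to 1, the distance-based rule and the `tolerance` threshold are all hypothetical.

```python
import math
from statistics import mean


def choose_next_scene(samples, intended, scene_if_match, scene_if_mismatch,
                      tolerance=0.5):
    """Pick one of two scenes from observed (valence, arousal) samples.

    samples   -- list of (valence, arousal) tuples observed during a scene,
                 assumed here to lie in [-1, 1] (an assumption for this sketch)
    intended  -- the (valence, arousal) the filmmaker marked up for the scene
    tolerance -- hypothetical threshold on the distance between the two
    """
    # Average the viewer's observed emotion over the scene.
    observed_valence = mean(v for v, _ in samples)
    observed_arousal = mean(a for _, a in samples)

    # Straight-line distance between observed and intended emotion.
    distance = math.hypot(observed_valence - intended[0],
                          observed_arousal - intended[1])

    # Binary choice: close enough to the intention, or not.
    return scene_if_match if distance <= tolerance else scene_if_mismatch


# Hypothetical scene names, purely for illustration.
next_scene = choose_next_scene(
    samples=[(0.6, 0.4), (0.8, 0.6)],
    intended=(0.5, 0.5),
    scene_if_match="scene_a",
    scene_if_mismatch="scene_b",
)
print(next_scene)
```

A rule like this uses both the filmmaker's intended emotion and what the system observes, but many other rules are possible, for example reacting to how the emotion changes over a scene rather than to its average.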

To discover the intended emotion, researcher Mani Telamekala wrote an open-source mark-up program, which filmmakers used to manually mark up the viewer emotion (valence and arousal) they intended for specific scenes.

We chose clips, each between 1 and 3 minutes long, from 16 genre films that were representative of the preferences found in our genre study. We asked participants to watch those clips while we recorded their faces. These recordings were put through start-up BlueSkeye's BSocial application, and we extracted Valence and Arousal data. This has given us an idea of how Valence and Arousal data vary between people and clips, and within the clips themselves.
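As a rough illustration of what "varies between people and within clips" can mean in practice, the Python sketch below summarises a set of recordings per clip. The data layout (a dictionary keyed by participant and clip), the function name `summarise_by_clip` and the choice of standard deviation as the measure of spread are assumptions made for this example; they do not describe BSocial's output format or the analysis we actually ran.

```python
from collections import defaultdict
from statistics import mean, pstdev


def summarise_by_clip(recordings):
    """Summarise how a signal (e.g. Valence) varies for each clip.

    recordings -- dict mapping (participant_id, clip_id) to a list of
                  values sampled over the clip (hypothetical layout)
    """
    # Group every participant's time series under the clip they watched.
    per_clip = defaultdict(list)
    for (_participant, clip), series in recordings.items():
        per_clip[clip].append(series)

    summary = {}
    for clip, series_list in per_clip.items():
        participant_means = [mean(s) for s in series_list]
        summary[clip] = {
            # Spread of participants' average response to the same clip.
            "between_people": pstdev(participant_means),
            # Average spread over time within each participant's viewing.
            "within_clip": mean(pstdev(s) for s in series_list),
        }
    return summary


# Made-up numbers, purely to show the shape of the input and output.
example = {
    ("p1", "clip_1"): [0.0, 0.2],
    ("p2", "clip_1"): [0.4, 0.6],
}
result = summarise_by_clip(example)
print(result)
```

The same function could be run once on Valence and once on Arousal to compare how each behaves across clips.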

We will be using this data to develop algorithms for audiences to interact with the adaptive film Before We Disappear.

The ambition is to distribute the technology and film as an app so that audiences can access it.

Before We Disappear will be screened at the Broadway cinema in Nottingham as part of a two-day interactive cinema workshop.

Tags: climate, computer vision, filmmakers, interactive cinema