05.07.16

Digitopia Experience – Musical Composition 2

The Digitopia experience premiered on 12 February 2016 at Lakeside Arts Centre, Nottingham, alongside Digitopia the stage show. Digitopia is currently touring to 16 UK venues in total. It was developed in close collaboration with Nottingham-based Tom Dale dance company. Previous posts have covered the Digitopia Premiere, the collaboration with Tom Dale company, the initial design stages, the final design, the technology used and initial ideas about the musical composition. Here we reflect briefly on the final stages of the musical composition.

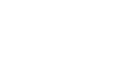

Each tablet could load the appropriate MIDI file and play each track when requested. By default every track was muted until a line placed on the pyramid started the music. This relies on each track being muted and restarted in real time. Although this seems a relatively simple requirement, the MIDI library did not work this way. The midi.js library takes all the MIDI data, converts it into a form that can be played within the browser and then sends the notes to the audio card, each with an indication of when to play it (in essence, a time delay for each note). As a result, muting the stream of notes takes effect only after a considerable delay: once notes have been sent to the audio card for processing they are no longer available and cannot be muted. The muting action affects only those notes not yet sent. This is clearly not acceptable where we need real-time interaction.

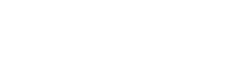

A major part of the music development was therefore rewriting the software so that each note was sent for processing at approximately the time it was required to play. Muting the stream would then take effect immediately. We were now in a position to read in the file and play the notes as requested, with muting and unmuting occurring whenever the user removed a line from the pyramid or placed one on it. To give users a different experience at each tablet they interacted with, we created individual files for each device that used different instruments to play the MIDI tracks.
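The idea of sending notes only when they are due can be illustrated with a minimal sketch. This is not the code used in Digitopia: `createTrackPlayer`, `sendNote`, the note object shape and the 25 ms lookahead are all hypothetical names and values chosen for illustration, and nothing here is part of the midi.js API.

```javascript
// Hypothetical sketch of just-in-time note dispatch with instant muting.
// `notes` is an array of { timeMs, pitch, velocity } sorted by timeMs.
// `sendNote` is an assumed callback that hands one note to the audio layer.
function createTrackPlayer(notes, sendNote, lookaheadMs = 25) {
  let nextIndex = 0;
  let muted = true; // default state: silent until a line is placed

  return {
    // Called when a line is placed on (false) or removed from (true) the pyramid.
    setMuted(value) {
      muted = value;
    },
    // Called repeatedly with the current playback position in milliseconds.
    // Only notes due within the short lookahead window are dispatched, so a
    // mute request can never be more than `lookaheadMs` late.
    tick(nowMs) {
      while (
        nextIndex < notes.length &&
        notes[nextIndex].timeMs <= nowMs + lookaheadMs
      ) {
        const note = notes[nextIndex++];
        if (!muted) {
          sendNote(note); // muted notes are skipped, not queued
        }
      }
    },
  };
}
```

In a browser, `tick` might be driven by a timer such as `setInterval` together with `performance.now()`. The trade-off is that timing precision now depends on how often `tick` runs, which is why a small lookahead window is kept rather than none at all.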

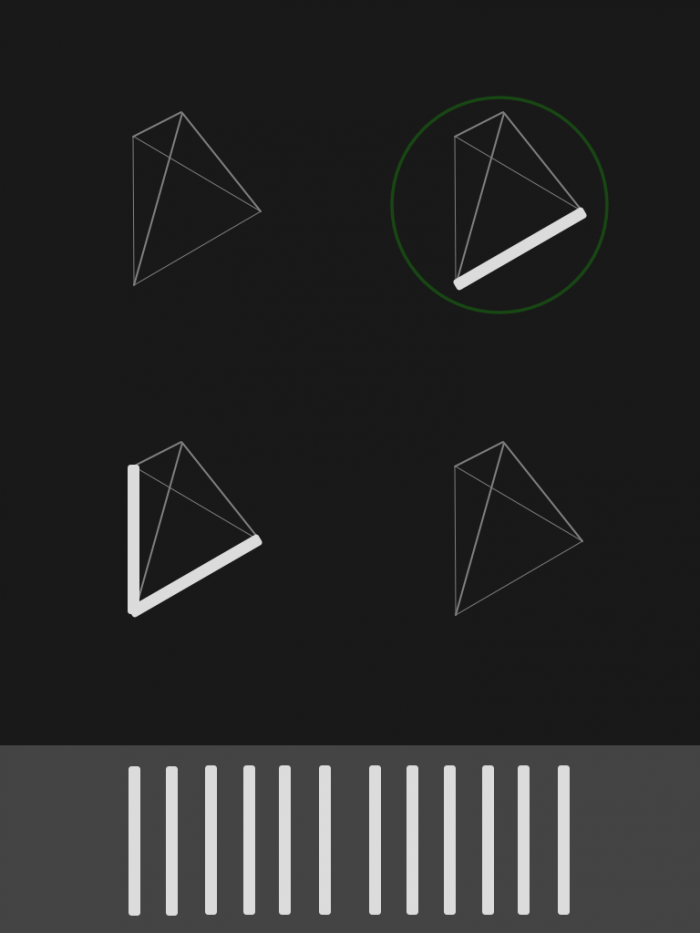

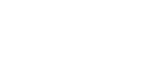

Although the basic functionality was now in place, we were still eager to add more interactivity beyond dragging lines onto the pyramid. The original brief also expressed the desire that participants should be able to interact with the musical structure after the initial interaction had occurred. For this aspect of the experience we added the ability to manipulate the structure to alter or distort the currently playing sounds. Users would take hold of the pyramid shape and move it around, with the extent of the movement determining the amount of distortion.

The circular nodes from the original design now changed slightly once any lines had been added. Any node in contact with an added line would turn blue, indicating that it could be moved. Moving the nodes would then produce the distortion. Once again, moving the structure was relatively straightforward, but which attributes of the sound could we feasibly alter in real time? This was further complicated by the fact that the changes had to be obvious enough to be heard and, ideally, movement in different directions would give differing degrees or types of distortion.

After some experimentation, we decided that horizontal movement would shift the pitch of the notes and vertical movement would apply a filter. The two movements could therefore be combined, each contributing to the overall output. This ended up working really well, especially the pitch shifting, which has an obvious effect on the music and creates some really interesting sounds.
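As a rough illustration of how a node's movement could be turned into these two controls, the sketch below maps a horizontal offset to a pitch shift in semitones and a vertical offset to a filter cutoff frequency. The function names, the ±12 semitone range, the 200 Hz to 8000 Hz cutoff range, the 200 pixel maximum drag and the choice that dragging upwards opens the filter are all assumptions made for the example; the post does not state the values or filter type actually used.

```javascript
// Hypothetical mapping from a node's drag offset to sound parameters.
function clamp(value, lo, hi) {
  return Math.min(hi, Math.max(lo, value));
}

// dx, dy: drag offset in pixels from the node's rest position.
// maxDrag: assumed offset (in pixels) at which the effect is at its maximum.
function distortionFromDrag(dx, dy, maxDrag = 200) {
  const x = clamp(dx / maxDrag, -1, 1);
  const y = clamp(dy / maxDrag, -1, 1);

  // Horizontal movement: whole-semitone pitch shift (assumed range -12..+12).
  const semitones = Math.round(x * 12);

  // Vertical movement: filter cutoff on an exponential scale, so equal
  // distances sound like equal changes (assumed range 200 Hz..8000 Hz).
  // Screen y grows downwards, so dragging up (negative dy) raises the cutoff.
  const minHz = 200;
  const maxHz = 8000;
  const t = (1 - y) / 2; // 0 at the bottom, 1 at the top
  const cutoffHz = minHz * Math.pow(maxHz / minHz, t);

  return { semitones, cutoffHz };
}

// Apply the shift to a MIDI note number, keeping it in the valid 0..127 range.
function shiftPitch(midiNote, semitones) {
  return clamp(midiNote + semitones, 0, 127);
}
```

Because the two axes are independent, a diagonal drag changes pitch and filter at once, which matches the combined effect described above.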

The Digitopia team: Tony Glover, Adrian Hazzard, Holger Schnädelbach, Laura Carletti, Ben Bedwell

Tags: children, Digitopia, interaction, interactive design, Media Flagship, performing data, theatre, visitor engagement