22.02.23

Studying the effect of media polarization on the dissemination of net zero policies – midpoint blog

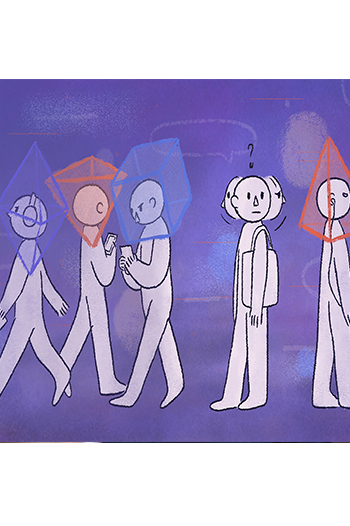

Recommender systems determine, to a large extent, the amount of information, news and even art we are exposed to in the digital space. This is why we at Horizon wish to better understand the impact that different recommender system designs have on how polarized and how knowledgeable we are about crucial environmental topics that will shape net zero policies in the decades to come.

Roughly halfway through our Net Zero Recommender systems project, and in collaboration with geology experts, we have set up two surveys to examine how comfortable different groups of participants are with their understanding of the mining of materials important for the net zero transition. The surveys also examine how the recommender system's ranking of information sources affects participants' sentiment towards mining.

Many developed economies do not rely on mining for a substantial part of their GDP and community growth, so their citizens are likely to know little about the role of mining in reducing carbon emissions. At the same time, the main way the public is likely to learn about both emerging policies and technologies is through machine-learning-powered search engines (i.e. search engines built on recommender systems). This is why we are convinced it is important to understand whether an individual's prior sentiment about a topic is likely to predetermine the language they use when searching for information, and in turn the confirmatory set of sources they discover. Our study will examine how likely different recommender system designs are to sway their users' initial sentiment, and whether they reduce or exacerbate user polarization after the fact.
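To make this feedback loop a little more concrete, here is a deliberately simplified sketch in Python. It assumes a search engine that ranks sources purely by how many words they share with the query, which is far cruder than any real recommender system. The snippets, sentiment scores, queries and helper names (`tokenize`, `score`, `top_k`, `mean_sentiment`) are all hypothetical and invented for illustration; none of them come from our surveys or from the recommender designs we are testing.

```python
# Toy sketch (hypothetical data): a bag-of-words ranker echoes the framing of the query.
# Real search engines and recommender systems are far more complex than this.

SOURCES = [
    # (snippet, hand-assigned sentiment towards mining in [-1, 1]); all invented
    ("Lithium mining pollutes rivers with toxic waste", -0.8),
    ("Toxic waste from new mines harms local communities", -0.6),
    ("Lithium mining is essential for clean energy batteries", 0.7),
    ("Clean energy jobs depend on new battery mines", 0.6),
    ("How lithium is extracted from brine and hard rock", 0.0),
]


def tokenize(text: str) -> set[str]:
    """Lower-case the text, strip simple punctuation and return the set of words."""
    return {word.strip(".,:;!?").lower() for word in text.split()}


def score(query: str, snippet: str) -> int:
    """Relevance = number of distinct query words that also appear in the snippet."""
    return len(tokenize(query) & tokenize(snippet))


def top_k(query: str, k: int = 2) -> list[tuple[str, float]]:
    """Return the k highest-scoring sources; Python's sort is stable, so ties keep list order."""
    return sorted(SOURCES, key=lambda src: score(query, src[0]), reverse=True)[:k]


def mean_sentiment(results: list[tuple[str, float]]) -> float:
    """Average sentiment of the sources a user actually sees."""
    return sum(sentiment for _, sentiment in results) / len(results)


if __name__ == "__main__":
    # Two hypothetical users searching the same topic with differently framed queries.
    queries = {
        "sceptical": "toxic waste from lithium mining",
        "supportive": "clean energy batteries and jobs",
    }
    for user, query in queries.items():
        results = top_k(query)
        print(f"{user}: mean sentiment {mean_sentiment(results):+.2f}")
        for snippet, _ in results:
            print("   ", snippet)
```

In this toy setup, the sceptically worded query surfaces only the two negative snippets and the supportively worded query only the two positive ones, so each user's prior framing is echoed back to them even though both are searching the same topic.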

Where it all started: interestingly, the motivation behind our study comes largely from our background in healthcare. When developing machine learning tools deployed in clinical practice to aid the diagnosis of different health conditions, we noticed that AI technologies can often be biased by spurious correlations present in the datasets used for training. Even if you design a system that seems highly accurate at remotely assessing Parkinson's disease, it might simply be learning behavioural patterns that are more common among the elderly population, individuals with mental health conditions, or people with unusual exercise routines. Mitigating those risks requires placing many logical constraints, in the form of causal assumptions, on the design of your AI system.

Going beyond healthcare, however, AI now powers most of the information flow we are exposed to in news outlets, social media and Google/YouTube searches. How robust, logical and beneficial to users are those AI systems, and how likely are they to be biased due to insufficient mechanistic constraints? As a small first step towards answering some of these questions, we will be rolling out our study to 250 participants, and we cannot wait to share our insights into how much search engines affect our knowledge, confidence and sentiment about important topics such as our sustainable future.

Net Zero Recommender systems project introduction blog

Tags: Artificial Intelligence, carbon emissions, digital, GDP, machine learning, Net Zero, polarization, recommender systems, social media, technologies